CHI 2019

The ACM Conference on Human Factors in Computing Systems

COVERAGE FROM SCOTLAND

Georgia Tech Research Integrates Human Capabilities with Machine Advances for Positive Impact in Society

Georgia Tech faculty and students from across the Institute are bringing their broad interdisciplinary research approach and expertise to the Association for Computing Machinery Conference on Human Factors in Computing Systems (CHI 2019), May 4-9 in Glasgow, UK.

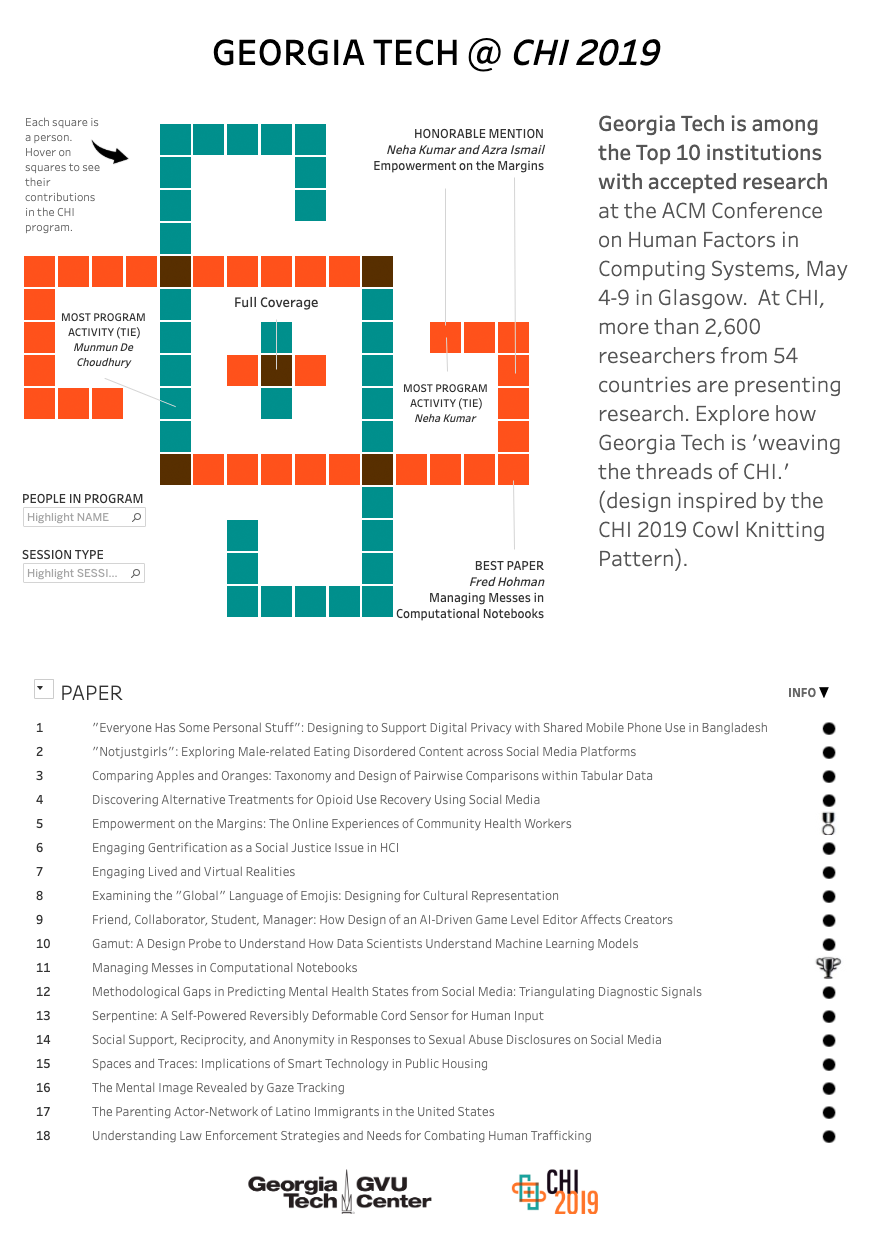

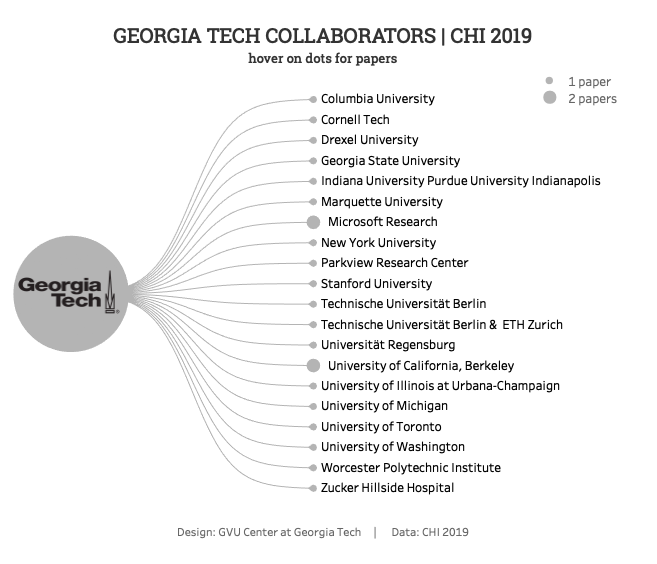

Among more than 700 institutions and 2,600 researchers participating, Georgia Tech is a Top 10 institution with accepted research. This research includes an expansive portfolio of work that uses technology to address tough social issues, such as drug addiction and mental health problems, and builds techniques to advance human capabilities in areas including creativity, advocacy, policy, and education.

Within the technical paper program, Georgia Tech has 18 accepted papers, ranking #7 overall at CHI. Included is a best paper (Managing Messes in Computational Notebooks) co-authored by Fred Hohman, Ph.D. student in computational science and engineering and computer science, and an honorable mention best paper (Empowerment on the Margins: The Online Experiences of Community Health Workers) from Azra Ismail, Ph.D. student in human-centered computing, and Neha Kumar, assistant professor in the School of Interactive Computing and Sam Nunn School of International Affairs.

Two women, Kumar and Munmun De Choudhury, also an assistant professor in the School of Interactive Computing, each have six accepted research contributions to CHI 2019, the most of any Georgia Tech researcher.

Take a look at Georgia Tech contributors and their work through the following data visualization, inspired by the CHI 2019 Cowl Knitting Pattern. Also, current research from all CHI participants with accepted papers across 48 countries can be explored in the three-part data visualization series Weaving the Threads of CHI developed by the GVU Center at Georgia Tech.

RESEARCH HIGHLIGHTS

Combatting Human Trafficking Through Visualization and Data Systems

The internet can both facilitate and combat human trafficking. Georgia Tech researchers are investigating the role computing might play in mitigating the crime, which affects some 30 million victims, and which has increasingly become a big data research problem. Julia Deeb-Swihart, a Ph.D. student in computer science, interviewed law enforcement analysts, detectives, and others investigating human trafficking in U.S. and Canadian cities to better understand their computing needs and how computer scientists can design more supportive tools.

The research team highlighted three areas where computing can help fight human trafficking (which includes victims sold for labor and sex):

- visualizing location data as traffickers move victims across jurisdictions

- merging information databases

- modernizing information systems

“We’re at a point where some traditional policing methods aren’t working anymore,” said Deeb-Swihart. “How do we take [those] existing methods and support [law enforcement] with technology? We can’t fully replace the methods, but we can support them.”

The paper Understanding Law Enforcement Strategies and Needs for Combating Human Trafficking is co-authored by Professor Amy Bruckman and Assistant Professor Alex Endert, both from the School of Interactive Computing.

Simply Thinking About an Image May Allow Computers to Retrieve a Digital Copy of It

When humans try to recall an image from memory, they involuntarily move their eyes in a “gaze pattern” similar to the one they make when actually looking at the image. James Hays, an associate professor in the School of Interactive Computing, is using webcams and machine learning techniques to track gaze patterns, determine what picture someone is thinking of, and then retrieve that image from a computer or the cloud.

Researchers recorded the eye movements of 30 participants as they looked at 100 different indoor and outdoor images. Participants were then asked to look at a blank screen and try to recall any of the images. Participants also visited a mock museum with 20 posters while wearing a headset with an eye tracker. Afterward, they looked at a blank whiteboard and were asked to recall as many of their favorite images as possible. Participants, on average, remembered between five and 10 of the museum images.

The results from both experiments, in the paper The Mental Image Revealed by Gaze Tracking, indicate that an individual’s gaze pattern while looking at an image contains a unique signature that computers can use to accurately determine the corresponding photo.

Using AI Agents as Equals in the Creative Process Takes on Many Forms

Georgia Tech researchers, led by Matthew Guzdial, Ph.D. student in computer science, have developed a tool with a built-in AI designer for Super Mario Bros.-style games to see how humans might interact with an AI while developing video game levels. Through two studies – one to develop the tool and a second to evaluate how people used it – the research team found people were receptive to working with an AI to develop a game, but the perceived value of the AI depended on the role the AI played and the interactions that took place.

The AI roles that were identified after close to 100 participants interacted with the tool included:

- friend

- collaborator

- student

- manager

The roles came about based on the designers’ own artistic styles, how designers attempted to employ the AI, and their reactions to the AI’s behavior. The co-design of game levels occurred in a turn-based manner, in which the human and AI designers took turns making changes to an initially blank level within the same level editor interface. AIs could only make additions to the game level while humans were able to delete the AI design elements.

The work is published in the paper Friend, Collaborator, Student, Manager: How Design of an AI-Driven Game Level Editor Affects Creators. Pictured: Guzdial with advisor and co-author Mark Riedl, associate professor in the School of Interactive Computing.

Cleaning Up Messy Computational Notebooks Just Got a Lot Easier

Researchers from Georgia Tech, the University of California, Berkeley, and Microsoft Research have developed a set of tools to help programmers and data scientists clean up their computational notebooks so they can program more effectively and efficiently.

The tools, called code gathering tools, allow users to go to any part of a long notebook to find, for example, a certain variable or equation hidden in messy code, and pull out the relevant information. The tools also help with reproducibility, code sharing, and communication by helping analysts find, clean, recover, and compare versions of code in cluttered, inconsistent notebooks.

“Programming in computational notebooks is helpful for seeing intermediate pieces of code and results interlaced together, but often these notebooks become very long and messy. This likely resonates with many students, but also data science and industry professionals, since it is a widely used technology,” said Fred Hohman, Ph.D. student in computational science and engineering and computer science and co-author on the work.

The research is detailed in the CHI Best Paper, Managing Messes in Computational Notebooks. Also read about Hohman’s other CHI paper on Gamut, which uses visualization to help data scientists interpret models.

Machine Learning Research Fills in Blanks, Reveals Risks of Self-Treating Opioid Addiction

Whether it’s a lack of insurance, a desire to avoid stigmatization, or some other driver, there are many reasons people struggling with opioid addiction might turn to alternative, clinically untested treatments.

These treatments are often developed and promoted through online communities, and there is little empirical data about how people use them, what side effects they may have, and how effective they are against opioid addiction.

Georgia Tech has taken a big step toward filling in some of these blanks with new human-centered computing research in Discovering Alternative Treatments for Opioid Use Recovery Using Social Media, co-authored by Stevie Chancellor, Ph.D. student in human-centered computing, and Munmun De Choudhury, assistant professor in the School of Interactive Computing.

Using machine learning techniques, researchers examined 1.44 million Reddit posts to identify the 20 most common self-treatments for opioid addiction being used without professional medical consultation. The findings also indicate that these treatments carry risks for those struggling with recovery, including possibly substantial side effects and a high chance of abuse.

Control Your Smartphone by Plucking and Twisting on Your Clothes

Led by Fereshteh Shahmiri, Ph.D. student in computer science, Georgia Tech researchers have developed a self-powered sensor, called Serpentine, that could improve accessibility for visually impaired users who otherwise rely on standard voice interaction with their phones.

Worn as a drawstring on a hoodie or perhaps a necklace, Serpentine’s flexible, twistable, stretchable, and squeezable nature enables a broad variety of input commands when programmed to an external device like a smartphone.

Consider environments like a crowded and noisy train station or quiet environments like a doctor’s office, or perhaps a bank or ATM where private information must be provided. In these instances, a standard voiceover interaction is inconvenient for visually impaired users, who are unable to otherwise interact with their device through the interface on the screen. By performing one of Serpentine’s many interactions – pluck, twirl, stretch, pinch, wiggle, and twist – users can issue any of a set of pre-programmed commands to their device.

This work is published in the paper Serpentine: A Reversibly Deformable Cord Sensor for Human Input. Pictured: Shahmiri with advisor and co-author Gregory Abowd, Regents’ Professor and J.Z. Liang Chair in the School of Interactive Computing.

Weaving the Threads of CHI

A Data Interactive Series on CHI's Technical Papers

Part 1: Top 20 Keyword Topics | Part 2: Authors and Countries | Part 3: Award Papers

PODCASTS

Gear up for CHI with the GVU Center's premiere episode of the Tech Unbound podcast, featuring Explainable AI. People's comfort with and confidence in artificially intelligent (AI) agents are just as important as the technology itself, and Upol Ehsan, Ph.D. student in computer science, discusses his recent research in Explainable AI from the ACM IUI Conference. He will present related work at the CHI 2019 Workshop on Human-Centered Machine Learning, along with advisor Mark Riedl from the School of Interactive Computing.

Beginning in the late 1990s, the United States saw a sharp increase in the number of opioid overdose deaths, which rose by nearly 600 percent between 1999 and 2017, according to data provided by the CDC. School of Interactive Computing Ph.D. student Stevie Chancellor will present a paper on this subject at CHI 2019. What exactly do the addiction support communities entail? What alternative strategies are people pursuing in recovery, and why? How can we ensure that clinicians are well-informed about the types of self-treatments being used outside of their care? Listen to how Chancellor and a larger team are helping to mitigate the opioid crisis.

Be a part of the Georgia Tech conversation at CHI 2019:

#CHI2019

GVU Center | @gvucenter

School of Interactive Computing | @ICatGT

Story: Alyson Powell Key, Allie McFadden, David Mitchell, Kristen Perez, Joshua Preston, and Albert Snedeker

Interactive Graphics: Joshua Preston

Podcasts: Ayanna Howard, Joshua Preston, David Mitchell, and Tim Trent

Web Design: Joshua Preston